1. Translation Processing Time

People who haven't studied or used English much can understand messages more easily and quickly when the messages are in their own language. For example, the Apache Tomcat project has the following message in English (ref: https://github.com/apache/tomcat/blob/master/java/org/apache/catalina/core/LocalStrings.properties#L20), shown here with its Korean translation:

applicationContext.addListener.iae.sclNotAllowed=Once the first ServletContextListener has been called, no more ServletContextListeners may be added.

applicationContext.addListener.iae.sclNotAllowed=첫번째 ServletContextListener가 호출되고 나면, 더 이상 ServletContextListener들을 추가할 수 없습니다.

Of course, the translation should be correct. A long time ago, the books published by the "ㅅ" publishing company in South Korea were very difficult to understand even though they had been translated into Korean. Perhaps the translator had sufficient English skills but no experience in software development, or never asked proficient engineers to review the translation before publication. I don't think this happened only in the IT field. Whether they were about economics or statistics, some books translated into Korean were hard to understand. Some passages were out of context, used terminology never seen in real practice, coined new words from odd combinations of Chinese characters, or were full of unnatural passive voice from overly literal translation. So some people tried to read the original English books instead, and others, including myself, had to rush in head first.

One thing is clear to me: once messages are translated correctly, it saves a lot of the time that many people would otherwise each have to spend translating for themselves. The more popular the software, the greater the value of a correct translation.

2. Apache Tomcat Translation with Korean examples

Since Apache Tomcat 9.0.15, almost every English message has been translated into Korean. If you set the default language of the JVM to Korean (`CATALINA_OPTS="-Duser.country=KR -Duser.language=ko"`) as in the following example, you can see all the internal information, warning, and error messages in Korean. Below, I simply ran Apache Tomcat with `bin/catalina.sh run`.

$ export CATALINA_OPTS="-Duser.country=KR -Duser.language=ko"

$ bin/catalina.sh run

Using CATALINA_BASE: /Users/tester/tomcat

Using CATALINA_HOME: /Users/tester/tomcat

Using CATALINA_TMPDIR: /Users/tester/tomcat/temp

...

24-Apr-2019 23:51:08.477 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log 서버 버전 이름: Apache Tomcat/9.0.18-dev

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log Server 빌드 시각: Apr 20 2019 19:48:52 UTC

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log Server 버전 번호: 9.0.18.0

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log 운영체제 이름: Mac OS X

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log 운영체제 버전: 10.14.4

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log 아키텍처: x86_64

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log 자바 홈: /Library/Java/JavaVirtualMachines/jdk1.8.0_144.jdk/Contents/Home/jre

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log JVM 버전: 1.8.0_144-b01

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log JVM 벤더: Oracle Corporation

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_BASE: /Users/tester/tomcat

24-Apr-2019 23:51:08.481 정보 [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_HOME: /Users/tester/tomcat

...

24-Apr-2019 23:51:08.488 정보 [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent 프로덕션 환경들에서 최적의 성능을 제공하는, APR 기반 Apache Tomcat Native 라이브러리가, 다음 java.library.path에서 발견되지 않습니다: [/Users/tester/Library/Java/Extensions:/Library/Java/Extensions:/Network/Library/Java/Extensions:/System/Library/Java/Extensions:/usr/lib/java:.]

24-Apr-2019 23:51:08.749 정보 [main] org.apache.coyote.AbstractProtocol.init 프로토콜 핸들러 ["http-nio-8080"]을(를) 초기화합니다.

24-Apr-2019 23:51:08.775 정보 [main] org.apache.coyote.AbstractProtocol.init 프로토콜 핸들러 ["ajp-nio-8009"]을(를) 초기화합니다.

24-Apr-2019 23:51:08.778 정보 [main] org.apache.catalina.startup.Catalina.load [526] 밀리초 내에 서버가 초기화되었습니다.

24-Apr-2019 23:51:08.806 정보 [main] org.apache.catalina.core.StandardService.startInternal 서비스 [Catalina]을(를) 시작합니다.

24-Apr-2019 23:51:08.807 정보 [main] org.apache.catalina.core.StandardEngine.startInternal 서버 엔진을 시작합니다: [Apache Tomcat/9.0.18-dev]

24-Apr-2019 23:51:08.814 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/docs]을(를) 배치합니다.

24-Apr-2019 23:51:09.052 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/docs]에 대한 배치가 [237] 밀리초에 완료되었습니다.

24-Apr-2019 23:51:09.055 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/manager]을(를) 배치합니다.

24-Apr-2019 23:51:09.117 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/manager]에 대한 배치가 [62] 밀리초에 완료되었습니다.

24-Apr-2019 23:51:09.117 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/examples]을(를) 배치합니다.

24-Apr-2019 23:51:09.911 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/examples]에 대한 배치가 [793] 밀리초에 완료되었습니다.

24-Apr-2019 23:51:09.911 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/ROOT]을(를) 배치합니다.

24-Apr-2019 23:51:09.969 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/ROOT]에 대한 배치가 [57] 밀리초에 완료되었습니다.

24-Apr-2019 23:51:09.969 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/host-manager]을(를) 배치합니다.

24-Apr-2019 23:51:10.019 정보 [main] org.apache.catalina.startup.HostConfig.deployDirectory 웹 애플리케이션 디렉토리 [/Users/tester/tomcat/webapps/host-manager]에 대한 배치가 [50] 밀리초에 완료되었습니다.

24-Apr-2019 23:51:10.022 정보 [main] org.apache.coyote.AbstractProtocol.start 프로토콜 핸들러 ["http-nio-8080"]을(를) 시작합니다.

24-Apr-2019 23:51:10.029 정보 [main] org.apache.coyote.AbstractProtocol.start 프로토콜 핸들러 ["ajp-nio-8009"]을(를) 시작합니다.

24-Apr-2019 23:51:10.031 정보 [main] org.apache.catalina.startup.Catalina.start 서버가 [1,252] 밀리초 내에 시작되었습니다.

Almost every message is now served in Korean: "... 밀리초 내에 서버가 초기화되었습니다" (meaning "the server was initialized in ... ms"), "웹 애플리케이션 디렉토리" (meaning "Web Application Directory"), "배치가 ... 완료되었습니다" (meaning "Deployment ... completed"), etc.
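To illustrate why the JVM language setting changes the messages, here is a minimal sketch of locale-based message lookup with the standard `java.util.ResourceBundle` API. It is not Tomcat's actual code: the class `LocaleMessageDemo` and its nested bundles `Messages` and `Messages_ko` are hypothetical stand-ins for the `LocalStrings.properties` file and its Korean counterpart, and they hold only the one message quoted in section 1. As far as I know, the JVM derives its default locale from `user.language` and `user.country` at startup, which is why the `CATALINA_OPTS` setting above takes effect.

```java
import java.util.ListResourceBundle;
import java.util.Locale;
import java.util.ResourceBundle;

public class LocaleMessageDemo {

    static final String KEY = "applicationContext.addListener.iae.sclNotAllowed";

    /** Base (English) bundle; a hypothetical stand-in for LocalStrings.properties. */
    public static class Messages extends ListResourceBundle {
        @Override
        protected Object[][] getContents() {
            return new Object[][] {
                {KEY, "Once the first ServletContextListener has been called, "
                        + "no more ServletContextListeners may be added."}
            };
        }
    }

    /** Korean bundle; the "_ko" suffix is what the lookup matches against the locale. */
    public static class Messages_ko extends ListResourceBundle {
        @Override
        protected Object[][] getContents() {
            return new Object[][] {
                {KEY, "첫번째 ServletContextListener가 호출되고 나면, "
                        + "더 이상 ServletContextListener들을 추가할 수 없습니다."}
            };
        }
    }

    /** Resolves a message for the given locale, falling back to the base bundle. */
    public static String lookup(String key, Locale locale) {
        // The no-fallback control skips the JVM default locale as an intermediate
        // fallback, so the result depends only on the requested locale.
        ResourceBundle bundle = ResourceBundle.getBundle(
                Messages.class.getName(),
                locale,
                ResourceBundle.Control.getNoFallbackControl(
                        ResourceBundle.Control.FORMAT_CLASS));
        return bundle.getString(key);
    }

    public static void main(String[] args) {
        // ko_KR has no exact bundle, so the lookup settles on Messages_ko.
        System.out.println(lookup(KEY, Locale.KOREA));
        // No French bundle exists in this sketch, so the English base is used.
        System.out.println(lookup(KEY, Locale.FRENCH));
        // What a process started with the JVM's current default locale would see.
        System.out.println(lookup(KEY, Locale.getDefault()));
    }
}
```

The point of the sketch is the fallback chain: a request for `ko_KR` is served by the `ko` bundle, and any key or language without a translation falls back to English, which is why a partially translated language still produces usable output.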

The screenshot below was taken from the HelloWorldExample servlet page in the default examples web application. To reach it, visit http://localhost:8080/, click the "Examples" menu at the top, then the "Servlet Examples" link, and finally the "Hello World" example link.

[Screenshot: The HelloWorld servlet example (/examples/servlets/servlet/HelloWorldExample)]

The Request Info servlet example ("RequestInfoExample") is served in Korean, too:

[Screenshot: The RequestInfo servlet example (/examples/servlets/servlet/RequestInfoExample)]

^C

25-Apr-2019 00:08:47.580 정보 [Thread-5] org.apache.coyote.AbstractProtocol.pause 프로토콜 핸들러 ["http-nio-8080"]을(를) 일시 정지 중

25-Apr-2019 00:08:47.589 정보 [Thread-5] org.apache.coyote.AbstractProtocol.pause 프로토콜 핸들러 ["ajp-nio-8009"]을(를) 일시 정지 중

25-Apr-2019 00:08:47.595 정보 [Thread-5] org.apache.catalina.core.StandardService.stopInternal 서비스 [Catalina]을(를) 중지시킵니다.

25-Apr-2019 00:08:47.615 정보 [Thread-5] org.apache.coyote.AbstractProtocol.stop 프로토콜 핸들러 ["http-nio-8080"]을(를) 중지시킵니다.

25-Apr-2019 00:08:47.618 정보 [Thread-5] org.apache.coyote.AbstractProtocol.stop 프로토콜 핸들러 ["ajp-nio-8009"]을(를) 중지시킵니다.

25-Apr-2019 00:08:47.619 정보 [Thread-5] org.apache.coyote.AbstractProtocol.destroy 프로토콜 핸들러 ["http-nio-8080"]을(를) 소멸시킵니다.

25-Apr-2019 00:08:47.620 정보 [Thread-5] org.apache.coyote.AbstractProtocol.destroy 프로토콜 핸들러 ["ajp-nio-8009"]을(를) 소멸시킵니다.

$

3. How Was It Started?

As you may know, the Apache Software Foundation (https://apache.org) has helped and nurtured great open source software projects and communities built on voluntary contributions. People get involved in a community through the mailing lists of a project they find interesting. They ask questions or try to answer other people's questions; those interested in testing, development, or documentation also discuss on the mailing lists how to improve the software and its processes, and report bugs through the bug tracking systems. The community invites people to become committers once they have made a substantial number of contributions in various forms, such as reporting bugs, helping others on the mailing lists, providing patches, and improving documentation. Committers make changes to the source. Furthermore, committers who share the vision of the community may become members of the Project Management Committee (PMC) and take part in the project's decision making on behalf of the community. This governance model is known as The Apache Way. See https://www.apache.org/theapacheway/index.html for more detail.

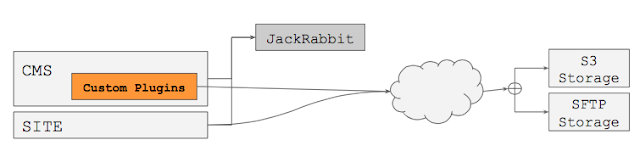

In any case, the Apache Tomcat translation initiative grew out of this community culture of voluntary contribution by individuals. On Nov. 12, 2018, Mark Thomas, a long-time Apache Tomcat committer and PMC member who has also contributed a great deal to the Apache Software Foundation, posted the following message to the users mailing list (ref: https://lists.apache.org/thread.html/d53034694855fcc346e660fb688ddb7886574e0168d6eca70e4ece37@%3Cusers.tomcat.apache.org%3E). Long story short, many people had run into the same fundamental problem: unless you were an expert in the Apache Tomcat project, it was very hard to find which resource files to patch. To solve it, the Apache Tomcat PMC set up a POEditor project (see the screenshot below) to encourage more people to take part in collective translation, with the aim of shipping the contributed resources in Apache Tomcat 9 releases.

From: Mark Thomas

Subject: Translation help wanted

Date: 2018/11/12 11:49:51

List: users@tomcat.apache.org

All,

Apache Tomcat includes some translations for error messages and parts of

the user interface - primarily the Manager web application. We would

like to improve the coverage and quality of these translations.

Accordingly, the Tomcat project has been set up on POEditor, a web-based

service for managing the translation of resource files.

The aim is that anyone who wants to contribute to the translations (it

could be anything from fixing a typo in an existing translation to

adding support for a new language) can create an account and contribute.

If you would like to contribute in this way then the

The Tomcat project can be found here:

https://poeditor.com/join/project/NUTIjDWzrl

Anyone should be able to join up as a contributor. If you are

interested, please sign up and start contributing.

Note: All contributions will be taken as being made under the terms of

the Apache License version 2.

I'm aiming to export the translations on a regular basis to the Tomcat

source code. How regularly will depend on the rate of new/updated

translations but as a minimum, I'm aiming to get any updates into the

next Tomcat 9 release.

If you have any difficulties or questions, please ask here.

Thanks,

Mark

---------------------------------------------------------------------

To unsubscribe, e-mail: users-unsubscribe@tomcat.apache.org

For additional commands, e-mail: users-help@tomcat.apache.org

From: Mark Thomas

To: Tomcat Users List

Subject: Translations update

Date: 2018/11/21 09:58:15

List: users@tomcat.apache.org

Hi all,

I wanted to let you know about the amazing progress that is being made

on the Tomcat translations at

https://poeditor.com/join/project/NUTIjDWzrl

In the short time since this effort has started the community has

achieved the following:

- French has increased from 18% to 64% coverage

- Simplified Chinese has been added and has already reached 32% coverage

- Korean has been added and has reached 10% coverage

- German has increased from 2% to 7% coverage

- Brazilian Portuguese has been added and has reached 4% coverage

- Spanish has increased from 42% to 44% coverage

as well as a smaller number of additions and corrections to another 6

languages.

A big thank you to everyone who has contributed.

There is still lots to do so if you would like to help out please join

us at:

https://poeditor.com/join/project/NUTIjDWzrl

Thanks,

Mark

In less than 10 days, the share of translated messages rose from 18% to 64% for French, from 42% to 44% for Spanish, and from 2% to 7% for German. Even better, languages that had never been in the project before were added: Simplified Chinese reached 32%, Korean 10%, and Brazilian Portuguese 4%.
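For readers wondering what a coverage figure like "10%" measures, here is a minimal sketch. It assumes that coverage means the share of English message keys that have a non-blank translation; how POEditor actually computes its percentages is not stated in the emails, so treat this definition, the class name `TranslationCoverage`, and the sample keys as hypothetical.

```java
import java.util.Properties;

public class TranslationCoverage {

    /**
     * Returns the percentage of keys in the base (English) properties that
     * have a non-blank value in the translated properties.
     */
    public static double coveragePercent(Properties base, Properties translated) {
        int total = 0;
        int done = 0;
        for (String key : base.stringPropertyNames()) {
            total++;
            String value = translated.getProperty(key);
            if (value != null && !value.trim().isEmpty()) {
                done++;
            }
        }
        // An empty base has nothing to translate; report 0 rather than divide by zero.
        return total == 0 ? 0.0 : 100.0 * done / total;
    }

    public static void main(String[] args) {
        // Made-up sample data: two English messages, one of them translated.
        Properties english = new Properties();
        english.setProperty("sample.start", "Starting service");
        english.setProperty("sample.stop", "Stopping service");

        Properties korean = new Properties();
        korean.setProperty("sample.start", "서비스를 시작합니다.");

        System.out.println(coveragePercent(english, korean) + "%");
    }
}
```

Under this definition, keys that exist only in the translated file do not raise the score, and a key with an empty value counts as untranslated.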

As of today, April 28, 2019, as I write this article, more than 99% of the messages have been translated into Korean, and more than 140 volunteers have made more than 3,044 contributions in 17 different languages! And the collective work continues. See the POEditor project homepage for details: https://poeditor.com/join/project/NUTIjDWzrl.

4. And It Continues

Have you ever seen weird translations in software messages during your IT career? Even when the English messages had been translated into your language, haven't some of them felt awkward?

Sharing these concerns, the Apache Tomcat community suggests that we fix this problem together. The suggestion comes not as an abstract principle but as a very concrete and practical solution: the POEditor project (https://poeditor.com/join/project/NUTIjDWzrl). It isn't difficult; editing is really easy. If you don't understand why a message is used or in which context, you can ask questions by commenting in the POEditor project. You can share ideas, too: committers may answer your questions, and you can discuss with other translators.

If you want to join in this shared experience of helping each other in the community, feel free to join the Apache Tomcat POEditor project (https://poeditor.com/join/project/NUTIjDWzrl) and choose the language you want to translate into.

Also, to join the users' or developers' mailing lists to ask questions or discuss anything, see https://tomcat.apache.org/lists.html.

In today's world, where everything is digitally connected, people who are far apart geographically and separated by time zones and languages start contributing to the open source projects that interest them. It is like the way people have collaborated for thousands of years to build shared reservoirs and plant trees on the dams to protect them as commons. Those who have already had this experience now try to figure out how to make it easier for others to participate. Easy tools such as POEditor help people get involved more easily and effectively. They know that things become easier together and that together they can achieve more for the community.