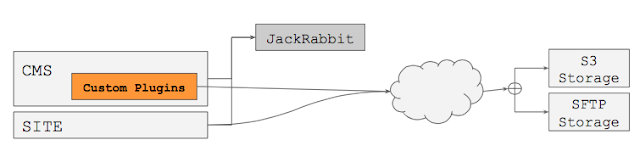

Surprisingly, many people have tried to avoid storing binary data in JCR when the amount is going to be really huge. Instead of using JCR, in many cases, they have implemented a custom (UI) module that stores binary data directly in a different storage such as SFTP, S3 or WebDAV through backend-specific APIs.

It makes some sense to separate the binary data store when the amount is going to be really huge. Otherwise, the database used by JCR can grow too large, which makes it harder and more costly to maintain, back up, restore and deploy as time goes by. Also, if your application needs to serve binary data in a highly scalable way, keeping everything in a single database makes that more difficult than moving the binary data store somewhere else.

But there is a big disadvantage to this custom (UI) module approach. If you store a PDF file through a custom (UI) module, you can no longer search its content through the standard JCR Query API, because JCR (Jackrabbit) is never involved in storing, indexing or retrieving the binary data. If you had used the JCR API to store the data, Apache Jackrabbit would have indexed your binary node automatically and you could search the content very easily. Being unable to search PDF documents through the standard JCR API can be a big disappointment.

Let's face the initial question again: can't we store a huge amount of binary data in JCR?

Actually... yes, we can. We can store a huge amount of binary data through JCR in a standard way if we choose the right Apache Jackrabbit DataStore for a different backend such as SFTP, WebDAV or S3. Apache Jackrabbit was designed so that a different DataStore can be plugged in, and it provides various DataStore components for various backends. As of Apache Jackrabbit 2.13.2 (released on August 29, 2016), it even supports an Apache Commons VFS based DataStore component, which makes it possible to use SFTP and WebDAV as backend storage. That's what I'm going to talk about here.

DataStore Component in Apache Jackrabbit

Before jumping into the details, let me first explain what DataStore was designed for in Apache Jackrabbit. Basically, Apache Jackrabbit DataStore was designed to store large binaries efficiently, improving performance and reducing disk usage. Normally all node and property data is stored through the PersistenceManager, but relatively large binaries such as PDF files are stored separately through the DataStore component. DataStore enables:

- Fast copy (only the identifier is stored by the PersistenceManager, in a database for example),

- No blocking in storing and reading,

- Immutable objects in DataStore,

- Hot backup support, and

- All cluster nodes using the same DataStore.

Please see https://wiki.apache.org/jackrabbit/DataStore for more detail. In particular, note that a binary data entry in DataStore is immutable: it cannot be changed after creation. This makes it a lot easier to support caching, hot backup/restore and clustering. Binary data items that are no longer used are deleted by the Jackrabbit garbage collector.
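To make the immutability point concrete, here is a minimal Java sketch. It assumes, as I understand Jackrabbit 2.x data stores do, that an entry's identifier is a SHA-1 digest of its content; the class name `ContentIdentifier` is hypothetical. Because the identifier is derived from the bytes, the same content always maps to the same entry, and a given identifier can never point at different content, which is why cached or backed-up copies never go stale.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/**
 * Hypothetical sketch of a content-derived DataStore identifier.
 * Assumption: identifier = SHA-1 digest of the binary content, which is
 * how I understand Jackrabbit 2.x data stores to work.
 */
final class ContentIdentifier {

    private ContentIdentifier() {
    }

    /** Returns the lowercase hex SHA-1 digest of the given bytes. */
    static String of(byte[] content) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-1");
            byte[] hash = digest.digest(content);
            StringBuilder hex = new StringBuilder(hash.length * 2);
            for (byte b : hash) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform is required to support SHA-1.
            throw new IllegalStateException(e);
        }
    }
}
```

Storing the same PDF twice would therefore produce one entry, and "changing" a binary really means writing a new entry under a new identifier.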

Apache Jackrabbit has several DataStore implementations as shown below:

FileDataStore uses a local file system, DbDataStore uses a relational database, and S3DataStore uses Amazon S3 as its backend. Very interestingly, VFSDataStore uses a virtual file system provided by the Apache Commons VFS module.

FileDataStore cannot be used if you don't have a stable shared file system between cluster nodes. DbDataStore has been used by Hippo Repository by default because it works well in a clustered environment, unless the amount of binary data grows extremely large. S3DataStore and VFSDataStore look more interesting because you can store binary data in an external storage. In the following diagrams, binary data is handled by Jackrabbit through the standard JCR APIs, so Jackrabbit has a chance to index even binary data such as PDF files. Jackrabbit invokes S3DataStore or VFSDataStore to store or retrieve binary data, and the DataStore component invokes its internal Backend component (S3Backend or VFSBackend) to write to or read from the backend storage.

One important thing to note is that both S3DataStore and VFSDataStore extend CachingDataStore of Apache Jackrabbit. This gives a big performance benefit, because a CachingDataStore caches binary data entries in the local file system to avoid communicating with the backend unnecessarily.

As shown in the preceding diagram, when Jackrabbit needs to retrieve a binary data entry, it invokes the DataStore (a CachingDataStore such as S3DataStore or VFSDataStore, in this case) with an identifier. CachingDataStore first checks whether the binary data entry already exists in its LocalCache [R1]. If it is not found there, it invokes its Backend (such as S3Backend or VFSBackend) to read the data from the backend storage such as S3, SFTP or WebDAV [B1]. While reading the data entry, it also stores the entry in the LocalCache and serves the data back to Jackrabbit. CachingDataStore keeps this LRU cache, LocalCache, at up to 64GB by default in a local folder, which can be changed in the configuration. Therefore, it should be very performant when a binary data entry is requested multiple times, because the entry is most likely served from the local file cache. Serving binary data from a locally cached file is probably much faster than serving it through DbDataStore, since DbDataStore neither extends CachingDataStore nor has a local file cache concept at all (yet).
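The read path described above ([R1] local cache first, [B1] backend on a miss) can be sketched as a read-through LRU cache bounded by total bytes. This is a simplified, in-memory illustration and not Jackrabbit's actual LocalCache, which keeps cached entries as files on disk; `BlobBackend` and `ReadThroughLruCache` are hypothetical names, with `BlobBackend` standing in for a Backend such as S3Backend or VFSBackend.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical stand-in for a Backend such as S3Backend or VFSBackend. */
interface BlobBackend {
    byte[] read(String identifier);
}

/**
 * Simplified, in-memory sketch of the CachingDataStore read path:
 * [R1] look in the local LRU cache, [B1] on a miss read from the backend,
 * then keep the entry so later reads are served locally.
 */
final class ReadThroughLruCache {

    private final BlobBackend backend;
    private final long maxBytes;
    private long currentBytes;
    private int backendReads;

    // accessOrder = true: iteration starts with the least recently used entry.
    private final LinkedHashMap<String, byte[]> entries = new LinkedHashMap<>(16, 0.75f, true);

    ReadThroughLruCache(BlobBackend backend, long maxBytes) {
        this.backend = backend;
        this.maxBytes = maxBytes;
    }

    byte[] get(String identifier) {
        byte[] cached = entries.get(identifier); // a hit also marks the entry as recently used
        if (cached != null) {
            return cached; // [R1] served from the local cache
        }
        byte[] data = backend.read(identifier); // [B1] cache miss: go to the backend
        if (data == null) {
            throw new IllegalStateException("no record for " + identifier);
        }
        backendReads++;
        if (data.length <= maxBytes) { // an entry larger than the whole cache is not kept
            entries.put(identifier, data);
            currentBytes += data.length;
            evictLeastRecentlyUsed();
        }
        return data;
    }

    // Entries are immutable, so nothing ever needs invalidating; we only evict to stay within maxBytes.
    private void evictLeastRecentlyUsed() {
        Iterator<Map.Entry<String, byte[]>> it = entries.entrySet().iterator();
        while (currentBytes > maxBytes && it.hasNext()) {
            currentBytes -= it.next().getValue().length;
            it.remove();
        }
    }

    int backendReads() {
        return backendReads;
    }

    boolean isCached(String identifier) {
        return entries.containsKey(identifier); // containsKey does not change access order
    }
}
```

Repeated reads of the same identifier hit the backend only once, as long as the entry has not been evicted in favor of more recently used ones.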

Using VFSDataStore in a Hippo CMS Project

To use VFSDataStore, you need the following properties in the root pom.xml:

```xml
<properties>
  <!-- ***START temporary override of versions*** -->
  <!-- ***END temporary override of versions*** -->

  <com.jcraft.jsch.version>0.1.53</com.jcraft.jsch.version>

  <!-- SNIP -->
</properties>
```

Apache Jackrabbit VFSDataStore is supported since version 2.13.2. You also need to add the following dependencies to cms/pom.xml:

```xml
<!-- Adding jackrabbit-vfs-ext -->
<dependency>
  <groupId>org.apache.jackrabbit</groupId>
  <artifactId>jackrabbit-vfs-ext</artifactId>
  <version>${jackrabbit.version}</version>
  <scope>runtime</scope>
  <!--
    Exclude jackrabbit-api and jackrabbit-jcr-commons since those are pulled
    in by Hippo Repository modules.
  -->
  <exclusions>
    <exclusion>
      <groupId>org.apache.jackrabbit</groupId>
      <artifactId>jackrabbit-api</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.apache.jackrabbit</groupId>
      <artifactId>jackrabbit-jcr-commons</artifactId>
    </exclusion>
  </exclusions>
</dependency>

<!-- Required to use SFTP VFS2 File System -->
<dependency>
  <groupId>com.jcraft</groupId>
  <artifactId>jsch</artifactId>
  <version>${com.jcraft.jsch.version}</version>
</dependency>
```
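If one of these artifacts is missing at runtime, the repository would likely fail when it tries to instantiate the configured DataStore class. A small, hypothetical sanity check using plain Java reflection can report what is missing from the classpath up front. The class names are assumptions taken from this article's configuration (`VFSDataStore`) plus JSch's main class `com.jcraft.jsch.JSch`; `ClasspathCheck` itself is a made-up helper.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical startup sanity check: reports which expected classes cannot be loaded.
 * Class names: VFSDataStore from the repository.xml example, JSch from the jsch artifact.
 */
final class ClasspathCheck {

    static final String[] VFS_SFTP_CLASSES = {
        "org.apache.jackrabbit.vfs.ext.ds.VFSDataStore", // jackrabbit-vfs-ext
        "com.jcraft.jsch.JSch"                           // jsch, used for sftp:// URIs
    };

    private ClasspathCheck() {
    }

    static List<String> missing(String... classNames) {
        List<String> result = new ArrayList<>();
        ClassLoader loader = ClasspathCheck.class.getClassLoader();
        for (String name : classNames) {
            try {
                // initialize = false: only check that the class loads; static initializers are not run.
                Class.forName(name, false, loader);
            } catch (ClassNotFoundException | LinkageError e) {
                // LinkageError covers a class that is present but whose own dependencies are missing.
                result.add(name);
            }
        }
        return result;
    }
}
```

Calling `ClasspathCheck.missing(ClasspathCheck.VFS_SFTP_CLASSES)` once at startup and logging a non-empty result would point directly at the missing dependency.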

Then, configure VFSDataStore in conf/repository.xml as in the following example:

```xml
<Repository>

  <!-- SNIP -->

  <DataStore class="org.apache.jackrabbit.vfs.ext.ds.VFSDataStore">

    <param name="config" value="${catalina.base}/conf/vfs2.properties" />

    <!-- VFSDataStore specific parameters -->
    <param name="asyncWritePoolSize" value="10" />

    <!--
      CachingDataStore specific parameters:
      - secret : key to generate a secure reference to a binary.
    -->
    <param name="secret" value="123456789"/>

    <!--
      Other important CachingDataStore parameters with default values, just for information:
      - path : local cache directory path. ${rep.home}/repository/datastore by default.
      - cacheSize : the number of bytes in the cache. 64GB by default.
      - minRecordLength : the minimum size of an object that should be stored in this data store. 16KB by default.
      - recLengthCacheSize : in-memory cache size to hold DataRecord#getLength() against DataIdentifier.
        Roughly 140 bytes per item.
    -->
    <param name="minRecordLength" value="1024"/>
    <param name="recLengthCacheSize" value="10000" />

  </DataStore>

  <!-- SNIP -->

</Repository>
```
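Two of the parameters above are easier to reason about with numbers. The hypothetical helper below assumes that binaries shorter than `minRecordLength` bypass the DataStore (being kept by the persistence manager instead), and it uses the rough 140-bytes-per-item figure from the comment above to estimate the heap used by `recLengthCacheSize`. Both are approximations for planning, not Jackrabbit code.

```java
/**
 * Hypothetical helper illustrating two parameters from the repository.xml example.
 * Assumptions: binaries shorter than minRecordLength are not stored in the DataStore,
 * and each recLengthCacheSize entry costs roughly 140 bytes of heap.
 */
final class DataStoreParams {

    static final long APPROX_BYTES_PER_LENGTH_ENTRY = 140L;

    private DataStoreParams() {
    }

    /** True if a binary of this length would be handed to the DataStore (under the assumption above). */
    static boolean goesToDataStore(long binaryLength, long minRecordLength) {
        return binaryLength >= minRecordLength;
    }

    /** Rough heap estimate, in bytes, for the in-memory record-length cache. */
    static long approxLengthCacheHeapBytes(long recLengthCacheSize) {
        return recLengthCacheSize * APPROX_BYTES_PER_LENGTH_ENTRY;
    }
}
```

With the example values, a 1023-byte binary would stay out of the DataStore while a 1024-byte one would go in, and `recLengthCacheSize="10000"` costs roughly 10000 × 140 = 1,400,000 bytes (about 1.4 MB) of heap.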

The VFS connectivity is configured in ${catalina.base}/conf/vfs2.properties, for instance as follows:

```properties
baseFolderUri = sftp://tester:secret@localhost/vfsds
```

So, in this specific example, VFSDataStore ends up using an SFTP backend storage, as configured in the properties file, to store and read binary data.
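Since the SFTP user name and password are embedded in `baseFolderUri`, it can help to check how the value parses before handing it to VFSDataStore. Here is a minimal sketch using only `java.util.Properties` and `java.net.URI`; the class and method names are hypothetical, and only the `baseFolderUri` key comes from the example above.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.net.URI;
import java.util.Properties;

/**
 * Hypothetical helper: reads baseFolderUri from vfs2.properties-style text and parses it,
 * so scheme, user info, host and path can be checked before use.
 */
final class VfsConfigInspector {

    private VfsConfigInspector() {
    }

    static URI baseFolderUri(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory reader
        }
        String value = props.getProperty("baseFolderUri");
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalArgumentException("baseFolderUri is not configured");
        }
        // URI.create throws IllegalArgumentException for malformed values,
        // e.g. an unencoded space in the password.
        return URI.create(value.trim());
    }
}
```

For the example value, this yields scheme `sftp`, user info `tester:secret`, host `localhost` and path `/vfsds`. Characters such as `@` or `/` in a password would normally need to be percent-encoded to keep the URI parseable.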

If you want to see more detailed information, examples and other backend usages such as WebDAV through VFSBackend, please visit my demo project here:

Update: Note that Hippo CMS 12.x pulls in Apache Jackrabbit 2.14.0+. Therefore, you can simply use ${jackrabbit.version} for the dependencies mentioned in this article.

Configuration for S3DataStore

In case you want to use S3DataStore instead, you need the following dependencies:

```xml
<!-- Adding jackrabbit-aws-ext -->
<dependency>
  <groupId>org.apache.jackrabbit</groupId>
  <artifactId>jackrabbit-aws-ext</artifactId>
  <!-- ${jackrabbit.version} or a specific version like 2.14.0-h2. -->
  <version>${jackrabbit.version}</version>
  <scope>runtime</scope>
  <!--
    Exclude jackrabbit-api and jackrabbit-jcr-commons since those are pulled
    in by Hippo Repository modules.
  -->
  <exclusions>
    <exclusion>
      <groupId>org.apache.jackrabbit</groupId>
      <artifactId>jackrabbit-api</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.apache.jackrabbit</groupId>
      <artifactId>jackrabbit-jcr-commons</artifactId>
    </exclusion>
  </exclusions>
</dependency>

<!-- Consider using the latest AWS Java SDK for the latest bug fixes. -->
<dependency>
  <groupId>com.amazonaws</groupId>
  <artifactId>aws-java-sdk-s3</artifactId>
  <version>1.11.95</version>
</dependency>
```

Then, configure S3DataStore in conf/repository.xml as in the following example (excerpt from https://github.com/apache/jackrabbit/blob/trunk/jackrabbit-aws-ext/src/test/resources/repository_sample.xml):

```xml
<Repository>

  <!-- SNIP -->

  <DataStore class="org.apache.jackrabbit.aws.ext.ds.S3DataStore">
    <param name="config" value="${catalina.base}/conf/aws.properties"/>
    <param name="secret" value="123456789"/>
    <param name="minRecordLength" value="16384"/>
    <param name="cacheSize" value="68719476736"/>
    <param name="cachePurgeTrigFactor" value="0.95d"/>
    <param name="cachePurgeResizeFactor" value="0.85d"/>
    <param name="continueOnAsyncUploadFailure" value="false"/>
    <param name="concurrentUploadsThreads" value="10"/>
    <param name="asyncUploadLimit" value="100"/>
    <param name="uploadRetries" value="3"/>
  </DataStore>

  <!-- SNIP -->

</Repository>
```
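The cache parameters in this example are easier to read as byte thresholds: `68719476736` is exactly 64 GiB (2^36 bytes). The following hypothetical helper assumes that a purge of the local cache starts once it grows beyond `cachePurgeTrigFactor × cacheSize` and shrinks it to about `cachePurgeResizeFactor × cacheSize`; treat it as a back-of-the-envelope aid rather than Jackrabbit's implementation.

```java
/**
 * Hypothetical helper that turns the S3DataStore cache parameters into byte thresholds.
 * Assumption: purging starts above trigFactor * cacheSize and shrinks the cache
 * to about resizeFactor * cacheSize.
 */
final class CachePurgeThresholds {

    final long triggerBytes;
    final long targetBytes;

    CachePurgeThresholds(long cacheSize, double trigFactor, double resizeFactor) {
        if (!(0 < resizeFactor && resizeFactor < trigFactor && trigFactor <= 1)) {
            throw new IllegalArgumentException("expected 0 < resizeFactor < trigFactor <= 1");
        }
        this.triggerBytes = (long) (cacheSize * trigFactor);  // truncated to whole bytes
        this.targetBytes = (long) (cacheSize * resizeFactor); // truncated to whole bytes
    }

    /** Converts bytes to GiB (1 GiB = 2^30 bytes). */
    static double toGiB(long bytes) {
        return bytes / (double) (1L << 30);
    }
}
```

With the values above, purging would start at roughly 60.8 GiB and bring the cache down to roughly 54.4 GiB, so make sure the local cache directory has at least 64 GiB of free space.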

The AWS S3 connectivity is configured in ${catalina.base}/conf/aws.properties in the above example.

You can find an example aws.properties at the following location; adjust the configuration for your environment:

- https://github.com/apache/jackrabbit/blob/trunk/jackrabbit-aws-ext/src/test/resources/aws.properties

Comparisons with Different DataStores

DbDataStore (the default DataStore used by most Hippo CMS projects) provides a simple clustering capability based on a centralized database, but it can increase the database size and, as a result, increase maintenance and deployment costs and make hot backup/restore relatively harder if the amount of binary data becomes really huge. Also, because DbDataStore doesn't maintain a local file cache for the "immutable" binary data entries, it is relatively less performant when serving binary data, in terms of binary data retrieval from JCR. You could argue, though, that the application should be responsible for all cache control so as not to burden JCR.

S3DataStore uses Amazon S3 as backend storage, and VFSDataStore uses a virtual file system provided by the Apache Commons VFS module. They obviously help reduce the database size, so system administrators can save time and cost in maintenance and new deployments with these DataStores. They are internal, pluggable components as designed by Apache Jackrabbit, so clients can simply use the standard JCR APIs to write and read binary data. More importantly, Jackrabbit is able to index binary data such as PDF files in its internal Lucene index, so clients can use standard JCR queries to retrieve data without having to implement custom code against specific backend APIs.

One notable difference between S3DataStore and VFSDataStore is that the former requires a cloud-based storage (Amazon S3), which might not be allowed in some highly secured environments, whereas the latter lets you use various cost-effective backend storages, including SFTP and WebDAV, deployed wherever you want. You can take full advantage of flexible cloud-based storage with S3DataStore, though.

Summary

Apache Jackrabbit VFSDataStore can be a very feasible, cost-effective and secure option in many projects that need to host a huge amount of binary data in JCR. VFSDataStore makes it possible to use SFTP, WebDAV, etc. as backend storage at a moderate cost, deployed wherever you want. It also lets you use the standard JCR APIs to read and write binary data, so it should save more development effort and time than implementing a custom (UI) plugin that communicates directly with a specific backend storage.

Other Materials

I once presented this topic to my colleagues, and I'd like to share that presentation with you as well. Please leave a comment if you have any questions or remarks.